Remove the artifice from intelligence: the real threat of Artificial Intelligence (AI) is the presumption that we know what intelligence is and how it operates.

The definition of Artificial Intelligence in Wikipedia seems a good-enough start:

Artificial intelligence (AI) is the capability of computational systems to perform tasks typically associated with human intelligence, such as learning, reasoning, problem-solving, perception, and decision-making. It is a field of research in computer science that develops and studies methods and software that enable machines to perceive their environment and use learning and intelligence to take actions that maximize their chances of achieving defined goals.

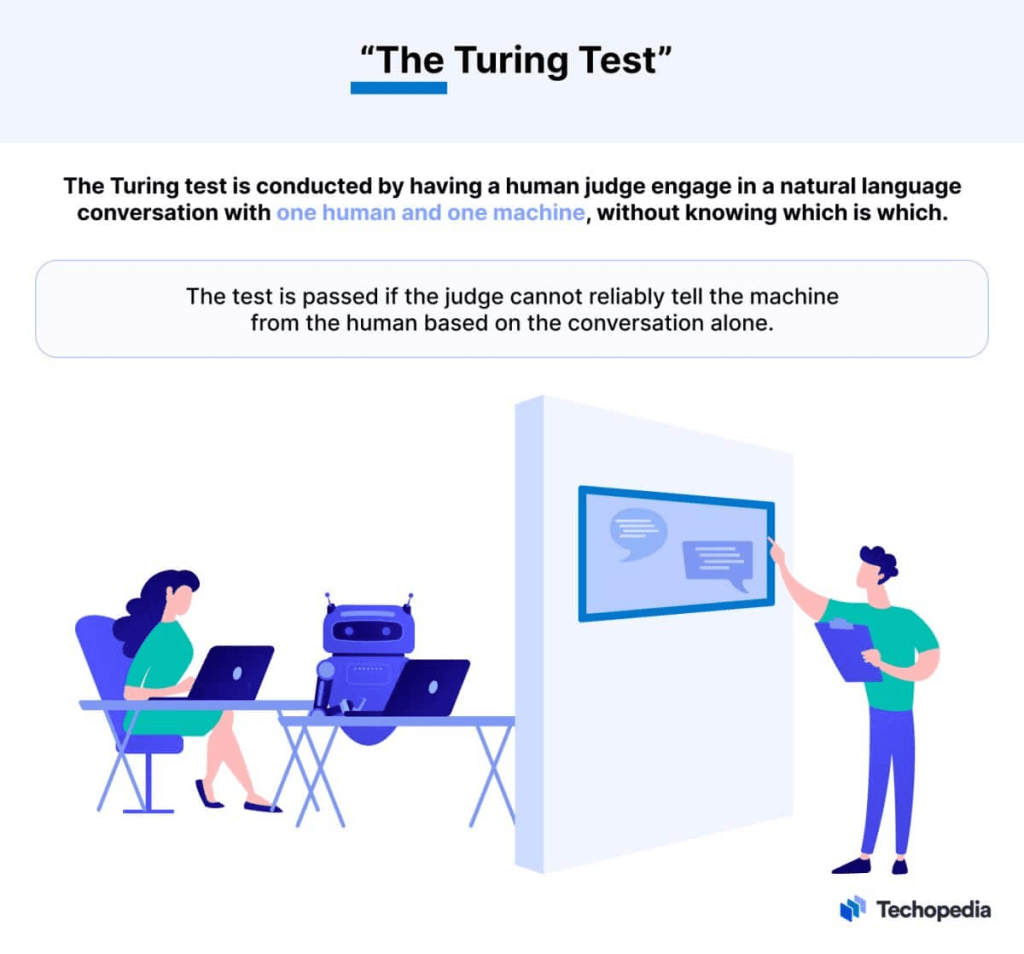

In my book, artificial intelligence cannot be intelligence, in the same way that I would argue that general intelligence (or G-Factor) is a false construct (another argument for another day). This is so even though it can model various aspects of human intelligence, and hence perform as if the system on which it was running were intelligent, or could be mistaken as such. The last phrase here pays homage to what we call the Turing test (below are the first two paragraphs of the Wikipedia page linked to it):

The Turing test, originally called the imitation game by Alan Turing in 1949, is a test of a machine’s ability to exhibit intelligent behaviour equivalent to that of a human. In the test, a human evaluator judges a text transcript of a natural-language conversation between a human and a machine. The evaluator tries to identify the machine, and the machine passes if the evaluator cannot reliably tell them apart. The results would not depend on the machine’s ability to answer questions correctly, only on how closely its answers resembled those of a human. Since the Turing test is a test of indistinguishability in performance capacity, the verbal version generalizes naturally to all of human performance capacity, verbal as well as nonverbal (robotic).

The test was introduced by Turing in his 1950 paper “Computing Machinery and Intelligence” while working at the University of Manchester. It opens with the words: “I propose to consider the question, ‘Can machines think?’” Because “thinking” is difficult to define, Turing chooses to “replace the question by another, which is closely related to it and is expressed in relatively unambiguous words”. Turing describes the new form of the problem in terms of a three-person party game called the “imitation game”, in which an interrogator asks questions of a man and a woman in another room in order to determine the correct sex of the two players. Turing’s new question is: “Are there imaginable digital computers which would do well in the imitation game?” This question, Turing believed, was one that could actually be answered. In the remainder of the paper, he argued against the major objections to the proposition that “machines can think”.

The key thing here is that the computational machinery, given that we cannot see how it differs from a human being, must sound like a human person. To do so, it must be able to reproduce artificially the written text, and at its most advanced the sounds, that a human reader (or listener) believes to be ‘natural language’, and it must be able to replicate how a human deals with questions or problem scenarios requiring thought. Note that we evaluate this not by the capacity to produce correct answers alone but also by the capacity to produce erroneous ones in the same way a human might.

The entirety of the test is built upon the binary distinction between what is natural and what is artificial, while propounding that if a system can artificially reproduce the features of natural thought, on the one hand, and language, on the other, it stands a chance of ‘passing’ as if it were the natural product of human practice in decision-making, problem-solving, learning, and conducting an argument as humans rather than logic machines would. I suppose the truth is that, like all binary oppositions, the natural-artificial binary is a false paradigm when applied to thought. Some humans act as if they were machines by simplifying the features of human thought processes, particularly in the omission of the operations of self-interest and desire, or indeed of refined paradigms in human development like communalism and altruism. No decision is entirely natural, none entirely artificial – as always, the binary merely names the poles of a range of behavioural products in which the various aspects of thought are configured from varying degrees and intensities of each.

Turing himself, after all, never seemed quite to master human interaction across the whole range of human neurodiversity, and his test reflects that aspect of self, lacking the nuance he was famed for lacking. AI is sometimes seen as a threat to humanity but then, most of humanity seems to think that most of humanity (excluding self and self-like others) is a threat to them and to their limited model of what it is to be human. And there is the point: artificial intelligence is only believed to be of use if it fits the paradigm of what a few people think of as ‘natural’ behaviour. At the moment, this will be the model of human selves constructed by global capitalism, which pretends that global benefits arise from global characteristics of selves that are all alike in that they are selfish, self-orientated, wasteful, greedy, and more amenable to the influence of deceit than of reason. This is the world that spawned advertising and demand-creation, economies of scale as a driver of business expansion, and a lack of respect for natural resources, resulting in their progressive commodification – think how the story of water in the coming century will replicate that of oil.

And think of the ‘imitation game’. Like all good games it is based on what psychologists call the theory of mind – the knowledge children acquire that their mind is a black box to others, as the minds of others are to the child. Such minds operate using game plans in which deceit is crucial (there are good studies that show that primates too play such games, which has led some to call them ‘natural’ and definitive of human behaviour). But this is to play the other game that the Dawkinses of our world play – that all non-selfish behaviour is both epiphenomenal and probably a mask for the selfish motives underlying its profession. Such traps are the creation of Eurocentric and capitalist-culture-centric assumptions. Meanwhile military AI is trained on war games based on Mutually Assured Destruction (MAD) and mad motives, and business games, such as those applied to me as an Economics student at grammar school, rest on similar motives of market competition.

Novelists often play with this idea for the reasons I outline – in the UK, Kazuo Ishiguro (and Ian McEwan’s lesser Machines Like Me) most stick in my mind – see my blog on Ishiguro and Wikipedia on McEwan linked to their respective names. AI is closely tied to the operation of large-scale enterprises – the current Labour Government is attempting to make it the holding plan of the public-private hybrid that is currently Wes Streeting’s idea of the NHS. Its early signs are already creating havoc in primary care and secondary care – its assumptions are those of a large-scale game with no knowledge of what makes persons ‘persons’. Indeed the whole awkward Starmer experiment has tried to substitute the supposed efficiencies of AI presumptions for socialist and communalist principles once allowed in the Labour Party, if never universal.

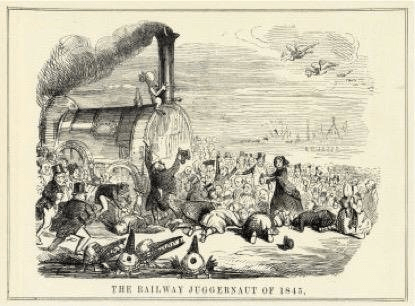

So, for me, I could do without AI, but to say so is to miss the elephant in the room – the one that is really an artificial Juggernaut crushing human lives – GLOBAL CAPITALISM.

With love

Steven xxxxxxxxxxxx